Originally posted at TMNS Blog

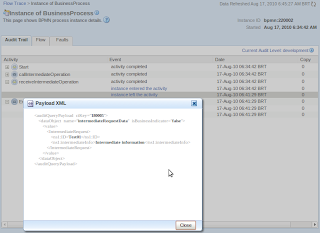

This week I was working on a proof of concept with Oracle Managed File Transfer (MFT) and exploring one of its advanced features in more detail: custom callouts.

Callouts let you run pre-processing and post-processing steps on a transfer. MFT ships with out-of-the-box callouts for compression, decompression, encryption and decryption. The interesting thing, however, is that you can also build your own custom callouts. Oracle provides samples showing how to build them on the MFT website; see bit.ly/learnmft for details.
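To make the idea of a pre-processing and post-processing step concrete, here is a plain-Java sketch of what a compression step and its matching decompression step do to a payload stream. It does not use the MFT plugin API at all; `PayloadSteps`, `compress` and `decompress` are hypothetical names, and gzip is an assumption chosen only for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

public class PayloadSteps {

    // Hypothetical pre-processing step: gzip-compress the payload before it is sent.
    // Closing the GZIPOutputStream also closes (and flushes) the wrapped 'out' stream.
    static void compress(InputStream in, OutputStream out) throws IOException {
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            in.transferTo(gz);
        }
    }

    // Hypothetical post-processing step: restore the original payload on the target side.
    static void decompress(InputStream in, OutputStream out) throws IOException {
        try (GZIPInputStream gz = new GZIPInputStream(in)) {
            gz.transferTo(out);
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] original = "hello, managed file transfer".getBytes(StandardCharsets.UTF_8);

        ByteArrayOutputStream packed = new ByteArrayOutputStream();
        compress(new ByteArrayInputStream(original), packed);

        ByteArrayOutputStream unpacked = new ByteArrayOutputStream();
        decompress(new ByteArrayInputStream(packed.toByteArray()), unpacked);

        // Prints the original text, showing the two steps are inverses of each other.
        System.out.println(unpacked.toString(StandardCharsets.UTF_8));
    }
}
```

Running `main` prints `hello, managed file transfer`. The sketch needs Java 11 or later (`InputStream.transferTo` and `ByteArrayOutputStream.toString(Charset)`).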

RunScriptPost

Starting from the RunScriptPre_01.zip sample provided by Oracle, I developed a similar callout, but one meant for the Targetpost action (post-processing on the target side). Below are the high-level steps, followed by a link to a zip file containing everything you need to test it yourself:

- Create a Java class (RunScriptPost.java) that implements the oracle.tip.mft.engine.processor.plugin.PostCalloutPlugin interface

- Compile the class, package it in a JAR file (RunScriptPost.jar) and copy it to $DOMAIN_HOME/mft/callouts/

- Create a callout definition file (RunScriptPost.xml) to describe the callout

- Run the createCallouts WLST command (createCallout.py) to configure the callout in MFT
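The real RunScriptPost.java has to implement the MFT PostCalloutPlugin interface, whose method signatures are not reproduced here. The part that does the actual work, running a script once the file has been delivered, can be sketched in plain Java. This is a minimal sketch, assuming a Unix-like shell and that the script receives the delivered file's path as its argument; `ScriptRunner`, `Result`, `run` and the timeout parameter are hypothetical names, not taken from Oracle's sample.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.concurrent.TimeUnit;

public class ScriptRunner {

    // Outcome of one script run: exit code plus combined stdout/stderr.
    public static final class Result {
        public final int exitCode;
        public final String output;

        Result(int exitCode, String output) {
            this.exitCode = exitCode;
            this.output = output;
        }
    }

    // Runs the command, waits at most timeoutSeconds, and returns exit code and output.
    // Output goes to a temp file so a chatty script cannot block on a full pipe buffer.
    public static Result run(List<String> command, long timeoutSeconds)
            throws IOException, InterruptedException {
        Path log = Files.createTempFile("runscript", ".log");
        try {
            Process p = new ProcessBuilder(command)
                    .redirectErrorStream(true)      // merge stderr into stdout
                    .redirectOutput(log.toFile())   // capture both in the temp file
                    .start();
            if (!p.waitFor(timeoutSeconds, TimeUnit.SECONDS)) {
                p.destroyForcibly();
                throw new IOException("Script timed out after " + timeoutSeconds + "s: " + command);
            }
            String output = Files.readString(log, StandardCharsets.UTF_8);
            return new Result(p.exitValue(), output);
        } finally {
            Files.deleteIfExists(log);
        }
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical usage: the inline script receives the delivered file's path as $1.
        Result r = run(List.of("sh", "-c", "echo processed \"$1\"", "sh", "/tmp/target/file.txt"), 30);
        System.out.println("exit=" + r.exitCode + " output=" + r.output.trim());
    }
}
```

A callout built around a helper like this would typically treat a non-zero `exitCode` as a failed post-processing step; how that failure is reported back to MFT is defined by the plugin interface and is not shown here.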

Once you have completed these steps, the callout is available in MFT Design, where it can be selected in the “add postprocessing actions” screen of your Transfers.

Check the README.txt file for a detailed description on how to deploy, configure and test it.

Download: RunScriptPost
